Making Sense of Mistakes: Implementing Formative Assessment to Improve Student Learning

Sheila Edstrom & Ryan Fedewa - Science Division

Every teacher, regardless of school or content area, faces challenges in helping students learn. In AP Physics C (calculus-based physics), one distinctive challenge is that students must speak the language of fairly advanced mathematics while reasoning through increasingly abstract content. Further, a given topic can often be approached in several ways, and mastery of one approach does not guarantee mastery of another. How do we help all students tackle the content of the course while giving each of them ample opportunities to succeed?

Perhaps the greatest challenge we faced in answering this question was serving the needs of an increasingly diverse group of students. This diversity is fairly recent: the course has more than tripled in size in less than five years.

With this growth came a wider range of mathematical ability, engineering and science backgrounds, and even personal motivation.

Success, to us, meant equity across this larger population. We wanted our students to be prepared for college, and beyond that, we wanted to build their cognitive and metacognitive thinking skills as much as possible. That second goal created a need to differentiate instruction, as well as a need to examine the means by which individual student growth could be communicated.

Our Initial Approach

We noticed that our students tend to struggle with multiple-choice questions, where there is no opportunity for partial credit. Preparing students to tackle these questions seemed a good place to start, so we built a collection of weekly formative assessments (WFAs), each consisting of 5 to 10 multiple-choice questions.

We then built a system to analyze student performance on these questions. In Mastery Manager, we “tagged” each question with a single identifier that linked a content area to a specific science skill, and we did the same for our other assessments, both formative and summative. Our hope was that we could pull reports on specific identifiers for individual students or whole classes, tracking both individual and collective performance.

While that sounded like a great idea, it did not work as hoped. Our first framework included 50 to 75 content areas, each linked to 5 different science skills, totaling 350 unique tags through which each question was filtered. Even when we pooled data from WFAs, unit exams, review assignments, and practice and final exams, most content areas had too few questions to support meaningful analysis of student or class performance. In other words, we could not infer any trends.

Eventually we realized the problem: we were diving too deep. We stepped back and reduced our content-area tags to our 12 unit topics. We also tagged content areas and science skills separately, whereas initially we had linked them together; for example, we originally had both a Newton’s Second Law – Graphing tag and a Newton’s Second Law – Calculus tag. By creating two separate groups of tags, we went from 350 linked tags to 17 independent ones: 12 content tags and 5 science-skill tags, applied separately.
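As a rough back-of-the-envelope check, suppose a year’s pool holds Q tagged questions spread evenly across tags, and that each question carries one content tag and one skill tag. Both are idealizing assumptions, and Q is a placeholder, not one of our counts. Under the linked scheme each tag averaged Q/350 questions; after the split, every question counts toward a content tag and, separately, toward a skill tag:

```latex
\frac{Q}{350}\ \text{per linked tag}
\quad\longrightarrow\quad
\frac{Q}{12}\ \text{per content tag}, \qquad \frac{Q}{5}\ \text{per skill tag}
```

Under these assumptions, each content tag rests on roughly 29 times (350/12) as many questions as a linked tag did, and each skill tag on 70 times (350/5) as many.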

Now we can filter data by content, by skill, or by both. Although it seems like a simple change, it took an entire year of data collection to reach this point. Fortunately, Mastery Manager had retained all the data we entered.

Changing the tags did not require collecting new data; it changed only how the existing data could be compiled and, in turn, which reports we could run. Trends at the individual, class, teacher, and course levels became visible.
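Filtering by content, by skill, or by both can be sketched in a few lines of Python. This is not how Mastery Manager works internally; the `Response` record, the `percent_correct` function, and the sample data are all hypothetical, chosen only to show that once the two tag groups are independent, each filter is just a match on one field.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Response:
    """One student's answer to one tagged question (hypothetical record format)."""
    student: str
    unit: str      # content tag, e.g. one of the 12 unit topics
    skill: str     # science-skill tag, e.g. one of the 5 skills
    correct: bool


def percent_correct(
    responses: Iterable[Response],
    unit: Optional[str] = None,
    skill: Optional[str] = None,
    student: Optional[str] = None,
) -> Optional[float]:
    """Percent correct over responses matching every filter that is given.

    Leave a filter as None to ignore it: pass only `unit` to filter by content,
    only `skill` to filter by skill, or both for the combination.
    Returns None when no responses match.
    """
    matches = [
        r for r in responses
        if (unit is None or r.unit == unit)
        and (skill is None or r.skill == skill)
        and (student is None or r.student == student)
    ]
    if not matches:
        return None
    return 100 * sum(r.correct for r in matches) / len(matches)


# Invented sample data for illustration only.
data = [
    Response("A", "dynamics", "calculus", True),
    Response("A", "dynamics", "graphing", False),
    Response("A", "kinematics", "calculus", True),
    Response("B", "kinematics", "graphing", True),
]

print(percent_correct(data, unit="dynamics"))                      # 50.0
print(percent_correct(data, skill="calculus"))                     # 100.0
print(percent_correct(data, unit="kinematics", skill="graphing"))  # 100.0
print(percent_correct(data, skill="graphing", student="A"))        # 0.0
```

With linked tags, the same questions would have been split across many narrow combined labels; here any combination of filters is assembled at query time.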

We believe our system of tagging questions could be applied in many other subject areas. For example, a foreign language class might tag each unit as a content area, as we did, and then tag skills such as reading, writing, speaking, and listening that are woven through every unit, much like our five science skills. A math class might use solving, graphing, modeling, simplifying, and analysis as its skills. Teachers in those areas know better than we do which tags fit their classes best, but we hope the paradigm of content areas plus four to six skills applies across departments.

Analyzing Data and Feedback

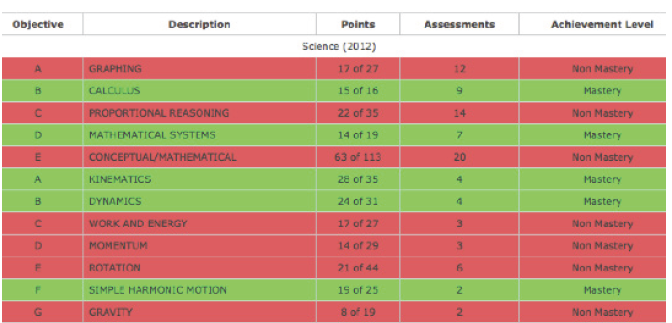

One of the best reasons to undertake a somewhat time-intensive process like this is that it lets us give struggling students specific guidance. Figure 1.1 shows the results for a “sample” student from a previous year after one semester of the course.

If this student asked for guidance on how to study, we could offer a personalized set of recommendations. The data suggest that the student is mathematically strong, as shown by the scores in the ‘calculus’ and ‘mathematical systems’ skills.

This conclusion is supported by the units in which the student has excelled: ‘kinematics’, ‘dynamics’, and ‘simple harmonic motion’ are all highly mathematical units, and the student likely did well in them because of those mathematical strengths. In the more conceptual units, however, which draw on skills that rely less on mathematical analysis, the student struggles. So instead of simply solving more problems, this student should try reading the textbook, writing out explanations in words, focusing on ranking tasks, or listening to an online lecture for the why of a problem, not just the how.
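The reasoning for this student, grouping one student’s results by skill and picking out the weakest skills, can be sketched in Python. The function names, the 60% threshold, and the skill name “conceptual explanation” are hypothetical placeholders (this article names only some of our five skills), and the responses are invented.

```python
from collections import defaultdict


def skill_profile(responses):
    """Return {skill: percent correct} for one student's (skill, correct) pairs."""
    totals = defaultdict(lambda: [0, 0])  # skill -> [number correct, number attempted]
    for skill, correct in responses:
        totals[skill][0] += int(correct)
        totals[skill][1] += 1
    return {skill: 100 * right / attempted for skill, (right, attempted) in totals.items()}


def weakest_skills(profile, threshold=60.0):
    """Skills scoring below `threshold` percent, weakest first (threshold is arbitrary)."""
    return sorted((s for s, pct in profile.items() if pct < threshold), key=profile.get)


# Invented responses; "conceptual explanation" is a placeholder skill name.
student = (
    [("calculus", True)] * 3 + [("calculus", False)]
    + [("mathematical systems", True)] * 2
    + [("conceptual explanation", True)] + [("conceptual explanation", False)] * 2
)

profile = skill_profile(student)
print(profile["calculus"])       # 75.0
print(weakest_skills(profile))   # ['conceptual explanation']
```

A profile like this only points to where to look; the study recommendations themselves still come from a teacher reading the pattern, as described above.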

In addition to giving feedback on individual students’ strengths and weaknesses, we can identify trends at the class, teacher, and even course level. Once those trends are identified, teaching can be adjusted, both through deliberate decisions we make to address areas of weakness and through the questions students raise in response to their feedback. This added interaction between students and teacher also fosters student accountability and responsibility: students have the opportunity to assess themselves and then take a more active role in identifying how to improve.

Perhaps a final benefit of our tagging system, and the pedagogy that grew out of it, is that we now know the content of our exams intimately, especially how each question relates to the skills we assess. In a sense, it helped us to go through the tagging process, mistakes included. By tagging each question, we identified which skills we were assessing and how heavily we assessed each one. As a result, we could make sure our exams were balanced: each skill should be represented in the proportion that makes sense for that unit, both in terms of the physics and in relation to the weight the College Board places on it in that content area. We now have greater confidence in the exams we give, which we hope provide an accurate measure of our students’ progress, individually and collectively.
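A balance check like this can be sketched by counting the skill tags on an exam and comparing each skill’s share with a target share. The `skill_balance` function, the target shares, and the 5-percentage-point tolerance below are hypothetical; in practice the targets would reflect the unit and College Board emphasis, which this sketch does not encode.

```python
from collections import Counter


def skill_balance(exam_skill_tags, target_shares, tolerance=0.05):
    """Flag skills whose share of an exam's questions misses its target share.

    exam_skill_tags: one skill tag per question on the exam.
    target_shares: skill -> intended fraction of the exam (hypothetical values).
    Returns {skill: (actual_share, target_share)} for skills off by more than
    `tolerance`. Skills on the exam but absent from target_shares are not checked.
    """
    if not exam_skill_tags:
        raise ValueError("exam has no tagged questions")
    counts = Counter(exam_skill_tags)
    total = len(exam_skill_tags)
    flagged = {}
    for skill, target in target_shares.items():
        actual = counts[skill] / total  # Counter returns 0 for skills never tagged
        if abs(actual - target) > tolerance:
            flagged[skill] = (actual, target)
    return flagged


# Invented 10-question exam and invented targets.
exam = ["calculus"] * 6 + ["graphing"] * 2 + ["mathematical systems"] * 2
targets = {"calculus": 0.4, "graphing": 0.4, "mathematical systems": 0.2}

print(sorted(skill_balance(exam, targets)))  # ['calculus', 'graphing']
```

Here the invented exam over-weights calculus (60% against a 40% target) and under-weights graphing (20% against 40%), so both are flagged for revision.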